I decided to write this article following conversation after conversation with other mental health professionals in which we all agreed that what we are asked to do by way of risk assessment is frequently profoundly unsatisfactory in ways that are hard to describe. There seems to be an official discourse of risk (e.g. Maden, 2005), which suggests that many adverse outcomes could be avoided if standardised, scientific risk assessments were more rigorously applied; and an unofficial discourse, characterised by a sense of low-grade anxiety about the official discourse and a worry that it distracts from what we think psychiatry is about.

‘Risk’ notoriously means different things to different people (Box 1). The usual understanding of risk in psychiatry – that it is a composite measure of probability and hazard – is a model first described in 1662 by the anonymous author of La Logique, ou L'Art de Penser, and its most famous example comes from a near-contemporary source, Pascal's Pensées. This is a statistical argument for the existence of God that has come to be known as Pascal's wager. We cannot know whether God exists or not and this cannot be known experimentally within our lifetime: the chance of God's existence is not known. However, we can imaginatively explore the consequences of not believing in God if He does exist (eternal damnation) or of believing in Him (salvation). The consequences (in the language of statistics, the utility) of salvation over damnation are such that even if there is only the smallest chance that God exists, a rational man must choose this belief. Pascal chose to wager that God exists, and in doing so he became the first in a long line to claim that statistical approaches can be instructive for ethical decisions.
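The structure of the wager can be made explicit as an expected-utility calculation. The sketch below is illustrative only: the function name, the tiny probability and the finite utilities attached to the case where God does not exist are assumptions chosen for the example, not values given by Pascal.

```python
import math


def expected_utility(p_exists: float, utility_if_exists: float,
                     utility_if_not: float) -> float:
    """Probability-weighted average of the two possible outcomes."""
    return p_exists * utility_if_exists + (1.0 - p_exists) * utility_if_not


# Assumed for illustration: a very small but non-zero chance that God exists,
# an unbounded utility for salvation or damnation, and a small finite cost
# (or gain) attached to belief (or unbelief) if God does not exist.
p_exists = 1e-9
believe = expected_utility(p_exists, math.inf, -1.0)
disbelieve = expected_utility(p_exists, -math.inf, 1.0)

print("believe:", believe)
print("disbelieve:", disbelieve)
```

However small the probability, the unbounded utility dominates the sum, which is why the argument does not depend on knowing the chance of God's existence.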

Box 1 Five definitions of risk

Risk as chance

Risk is used by some (‘frequentist’) statisticians as a synonym for odds and is unrelated to danger. There is a ‘risk’ of winning if you buy a lottery ticket. In this article the term odds is preferred for this sense.

Risk as belief

Risk is used by some (‘subjectivist’) statisticians for belief in the possibility of a single event governed by Knightian uncertainty. There is a ‘risk’ of a stock market crash tomorrow. Subjective probabilities are the basis of Bayesian statistics: as new pieces of evidence are accumulated, the degree of belief in an event is revised accordingly. In this article the term used is subjective probability.

Risk as belief and risk as chance are sometimes elided because both are quantitative approaches to prediction: the Royal Society (1992) has defined risk as ‘the chance, in quantitative terms, of a defined hazard occurring’.

Risk as hazard or danger

Risk is sometimes used to mean any hazard or danger – for example the standard sign over high-tension power supplies warns ‘danger, electrocution risk’. In this article, the term hazard is preferred.

Risk as unacceptable hazard or danger

Risk (particularly in lay uses of the term) is used to mean unacceptable danger – for example in headlines that state ‘Hospital closures risk lives’.

Risk as a combined measure of chance/belief and impact

In this sense, risk is the product of a measure of the probability of an event (in psychiatry this is usually a subjective probability) and a measure of the impact of an event. This is the standard use of the term in many professional circles, including psychiatry. Although it can be expressed in numerical terms, in everyday clinical practice the probability is considered in terms of high, medium or low, as is the combined measure.
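As a minimal sketch of this fifth definition, the product can be written out directly. The function name and the two example events are hypothetical, and the impact values are on an arbitrary scale assumed for the example.

```python
def risk_score(probability: float, impact: float) -> float:
    """Risk as the product of a probability and a measure of impact."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    return probability * impact


# Hypothetical events on an arbitrary impact scale.
frequent_minor = risk_score(probability=0.5, impact=2.0)
rare_severe = risk_score(probability=0.01, impact=100.0)

print(frequent_minor, rare_severe)
```

The two events receive essentially the same score although they are very different in kind, which is one reason the combined measure needs to be read alongside its components.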

In the first part of this article I endeavour to unpick some of the assumptions behind risk as probability and the construction of risk as hazard. Readers who prefer to see applications before theory might like to reserve this section until the end: if so, go straight to the section ‘Risk: the acceptable form of stigma’, in which I introduce the concept of secondary risk before considering how some of these concepts are used in psychiatry today.

Modelling uncertainty

In the early modern world it was assumed that with complete knowledge of present states, the future would be known as a function of these states. A God's-eye view of the world would allow prediction of the future, and humankind was jealous to occupy this perspective. A major change in our world view over the past 300 years has been an acknowledgement of the degree of randomness in the world and the extent to which reductionist models fail to capture the truth of the world around us.

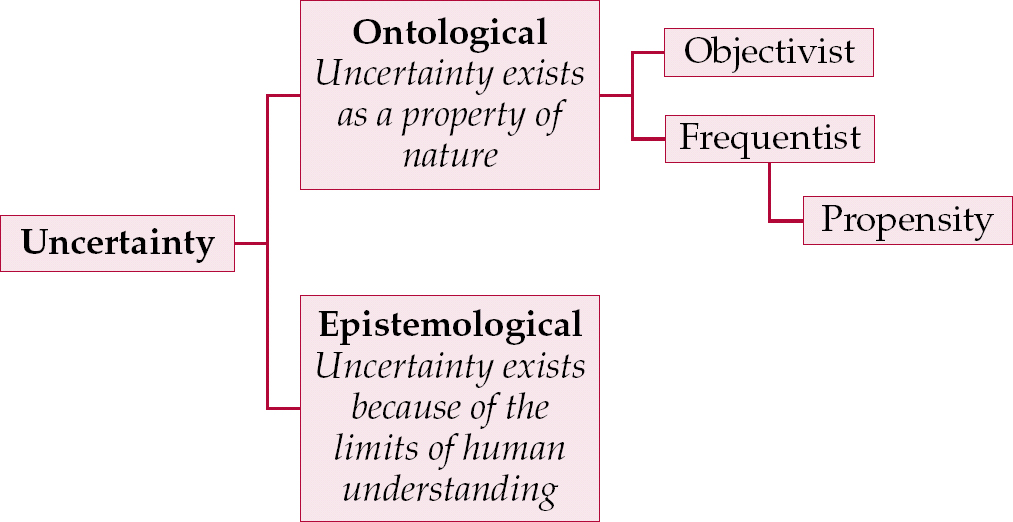

The ontological position

The objectivist model

Modern and postmodern models of the world fall broadly into two camps in describing uncertainty (Fig. 1). The first of these, the objectivist position, assumes that randomness exists in nature – that God plays dice. Statistical methods model this uncertainty. The view is often attributed to Laplace. Here, the probability of an event in a particular random trial is defined as the number of equally likely outcomes that lead to that event divided by the total number of equally likely outcomes. Bernoulli's law of large numbers states that if an event occurs k times in n independent trials, then as n becomes arbitrarily large, k/n should come close to the objective probability of the event. However, there are objections to this definition (first raised by Leibniz), not least that objective probability is the probability deduced after an infinitely large number of trials – an abstraction and so not an objective property of the world.
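Bernoulli's law of large numbers can be illustrated with a short simulation. This is a sketch under stated assumptions: a fair six-sided die simulated with Python's pseudo-random generator, with a fixed seed so that runs are repeatable; the function name is hypothetical.

```python
import random


def relative_frequency_of_six(n_rolls: int, seed: int = 0) -> float:
    """Roll a simulated fair die n_rolls times and return k/n for sixes."""
    rng = random.Random(seed)
    k = sum(1 for _ in range(n_rolls) if rng.randint(1, 6) == 6)
    return k / n_rolls


# The ratio k/n settles towards 1/6 (about 0.167) as n grows,
# but any finite n gives only an approximation.
for n in (10, 1_000, 100_000):
    print(n, relative_frequency_of_six(n))
```

The simulation also shows the objection in miniature: no finite run delivers the objective probability itself, only an estimate of it.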

Fig. 1 Models of uncertainty.

The frequentist model

A second model within the ontological position is the frequentist one. The frequentist solution to the problem of objective probability being an abstraction is to define the true probability as the limiting relative frequency in such a series of trials and do away with the idea of an objective probability.

Although the frequentist idea of infinite repetition of trials is an idealisation, it is, as Fonseca (2006) points out, ‘enough to cause a good amount of discomfort to partisans of the objective approach: how is one to discuss the probability of events that are inherently “unique”?’ – such as the chance that the patient in front of me will take her own life within a certain time frame.

The propensity view

One alternative within the frequentist approach is the propensity view, most famously argued by Karl Popper (1959): probability represents the ‘disposition’ of nature to yield a particular event without it necessarily being associated with a long-run frequency. These propensities are assumed to exist in some real if metaphysical sense. A well-made die, for example, will have a propensity to roll a six 1 time in 6. This is as much a property of the die's design as are its colour and weight.

The epistemological position

An alternative to objectivist views of probability (whether frequentist or propensity based) asserts that randomness is not an ontological (objectively existing) phenomenon but an epistemological one: i.e., that randomness is a property not of the world, but of the tools we use to describe it. It is a return to the idea that there is a God who has a complete knowledge, but that human knowledge is incomplete. In this world view, randomness is a measure of ignorance. If we knew everything about a coin toss we could predict how it would land; as we do not, we model its behaviour as probability.

In a refinement of the epistemological position, Frank Knight famously distinguished measurable uncertainty, or true risk, from unmeasurable uncertainty. Knight refers to how large numbers of independent observations of homogeneous events, such as coin tossing, lead to an ‘apodictic certainty’ that the observed probability is true or as close to true as makes no difference. He contrasts this with real-world conditions, in which it may be impossible to assemble a large series of independent observations, a situation he prefers to describe as a condition of uncertainty. Knightian uncertainty prevails more often than risk (in the sense of odds or frequentist probability) in the real world:

‘[Any given] “instance” … is so entirely unique that there are no others or not a sufficient number to make it possible to tabulate enough like it to form a basis for any inference of value about any real probability in the case we are interested in. The same applies to the most of conduct’ (Knight, quoted in Bernstein, 1996: p. 221).

For Knight, true risk is measurable, knowable and manageable: although roulette is a game of chance, the casino owner is certain of a profit. Uncertainty is a product of ignorance (Donald Rumsfeld's celebrated ‘unknown unknowns’). Under conditions of Knightian uncertainty, it is meaningless to speak of the odds that a judgement is correct.

The subjectivist paradigm

In 20th-century developments of statistical theory, probability took a subjective turn in order to remain a useful tool. Instead of looking backwards at tabulated values of prior events to predict like events in the future, it is possible to look forward and have a belief about the future. The strength of this belief can be measured. For example, in a horse race, the spectators have more or less the same knowledge but place different bets because they believe different horses will win. The bets can be observed and used to infer personal beliefs and subjective expected probabilities – expectancies about the future. In this situation, risk and probability are synonyms for degree of belief. (This is in many respects a return to an older meaning of the word probability, as the degree of belief or approval we give a statement – it is the way Pascal was using the term in his wager.) One variant of the subjectivist paradigm is the Bayesian, where the degree of belief in a hypothesis is revised as new information is received. Other developments include Von Neumann's game theory, which studies the choice of optimal behaviour when the costs and benefits of each choice depend on the choices of other individuals.

Subjectivist statisticians have made important contributions to cognitive science in the 20th century by showing how plastic subjective estimations of risk (subjective probability and hazard) can be. Our estimations of risk can be manipulated by changing the reference point, a phenomenon described by Kahneman & Tversky (1984) as ‘failure of invariance’. Most psychiatrists will be familiar with how this operates in practice: if one of their patients takes her own life, their risk assessments become more cautious and risk-averse for a time.

Risk: the acceptable form of stigma

The conventional utilitarian view of risk that is used in psychiatry models human agents (psychiatrists) as making subjective decisions about hazards and subjective probabilities within a realist paradigm – that is, that the hazards subject to risk assessment are in themselves ‘real’. However, this view of risk is not without its critics, who take instead a social constructivist view. Some hazards are identified as ‘risks’, becoming politically visible and actively managed; others are not. These judgements are historical and culturally specific. A leading exponent of this view is Mary Douglas. She argues not that dangers do not exist in the world, but that the crucial element in a danger becoming a ‘risk’ (hazard) is how it is represented and politicised. In particular, she argues that the risks that receive the most attention are those that are connected with legitimating moral principles: that in a sense, risk is a secular form of sin (Douglas, 1992).

Psychiatric risk in Douglas's conception is related to otherness, the traditional locus of madness. In the 19th century, discourses of madness were used to police the boundary between societal self and other: the mad were confined in the great Victorian asylums. In the 21st century, discourses of risk are fulfilling a similar social function. Risk is the acceptable form of stigma: ‘high-risk patients in the community’ are a legitimate source of moral concern, even as political correctness makes it less socially acceptable to rail against ‘loonies’. The public view of risk as unacceptable danger ‘doesn't signify an all-round assessment of probable outcomes, but becomes a stick for beating authority, often a slogan for mustering xenophobia’ (Douglas, 1992: p. 39).

Primary and secondary risks

Michael Power's paper for Demos (Power, 2004) draws attention to some of the dangers of ‘the risk management of everything’. Internal control systems that use risk management tools to manage Knightian uncertainty have an effectiveness that is, in principle, unknowable. Internal control systems are themselves organisational projections of controllability ‘which may be misplaced’:

‘In many cases it is as if organisational agents, faced with the task of inventing a management practice, have chosen a pragmatic path of collecting data which is collectable, rather than that which is necessarily relevant. In this way, operational risk management in reality is a kind of displacement. The burden of managing unknowable risks … is replaced by an easier task which can be reported to seniors’ (Power, 2004: p. 30).

Anxiety, as Power puts it, drives the risk management of everything. Power writes about business organisations, not mental health providers, although his conclusions about this neurotic organisational attempt to tame anxiety have a lucidity about how defensive processes work that often seems missing from psychiatric literature on risk: the processes themselves generate more anxiety as a secondary risk of attempting to manage the unmanageable.

Power's distinction between primary and secondary risk management is perhaps his most useful contribution to the debate about risk:

‘the experts who are being made increasingly accountable for what they do are now becoming more preoccupied with managing their own risks. Specifically, secondary risks to their reputation are becoming as significant as the primary risks for which experts have knowledge and training’ (Power, 2004: p. 14).

He goes on to discuss how conflicts between the demands of primary and secondary risk management can create hazards. Processes that concentrate on auditable process rather than substantive outputs ‘distract professionals from core tasks and create incentives for gaming’ (p. 26). In writing about business, he feels that there is something ‘deeply paradoxical about being public about “managing” reputation compared with committing to substantive changes in performance’ (pp. 35–36).

‘Although marketed in the name of outcomes, strategy, value and best business practice, the cultural biases that drive the new risk management demand a procedural and auditable set of practices because control must be made increasingly publicly visible and because organisational responsibility must be made transparent. In such a cultural environment, with institutions which tend to amplify blame and the logic of compensation, it is rational for organisations and the agents within them to invest in management systems with a strong secondary risk flavour’ (Power, 2004: p. 41).

Risk management is increasingly driven by secondary risks, even though it is ‘sold’ to stakeholders as management of primary risk. Individuals within organisations become anxious about the risk management tools they are given, then work to reduce this anxiety by offloading their own share of this risk. Power sees the result ‘as a potentially catastrophic downward spiral in which expert judgement shrinks to an empty form of defendable compliance’ (Power, 2004: p. 42), thus hobbling the expertise of the people most needed to manage the risk. Instead of useful expert opinions with which to guide action, we have more and more ‘certifications and non-opinions which are commonly accepted as useless and which are time-consuming and distracting to produce’ (p. 46). He goes on to describe how this is not simply risk aversity but often responsibility aversity. In different social arenas, there are different asymmetries between blame and credit: where rewards are higher and positive outcomes attractive, there may be more appetite for risk than where rewards are lower and there is blame for negative outcomes.

Power's prescription is to create a legitimate ‘safe haven’ for the judgement of the individual:

‘The policy and managerial challenge is to attenuate and dampen the tendency for control systems to provide layers of pseudo-comfort about risk. There is a need to design soft management systems capable of addressing uncomfortable uncertainties and deep-seated working assumptions, overcoming the psychological and institutional need to fit recalcitrant phenomena into well tried, incrementally adjusted, linear frameworks of understanding’ (Power, 2004: pp. 50–51).

Psychiatric risk assessment

In predicting adverse events in psychiatry, what kind of uncertainty is being modelled? Two kinds of risk assessment tool are used in psychiatry, the clinical and the actuarial.

Actuarial assessment tools

Actuarial tools use a frequentist approach to develop odds ratios that can be used in a Bayesian way to alter a prediction that a particular patient may, for example, take their own life. Thus, a paper on suicide in a population of individuals who repeatedly self-harm (Harriss et al, 2005) gave the odds ratios of some common risk factors:

| Risk factor | Odds ratio |
|---|---|
| Use of alcohol | 1.14 |
| Widowed/divorced/separated | 1.51 |
| Age 55+ | 1.79 |

The problem with such an approach is that even in high-risk groups, such risk factors have low predictive value because of the low prevalence of the predicted outcome: suicide is a relatively rare event. In this paper, examining a population of patients who had overdosed of whom 2.9% subsequently died by suicide, the sub-population with all three of the risk factors above had an incidence of suicide of 5.4%. These are major risk factors with relatively high odds ratios; many other commonly accepted risk factors (e.g. multiple episodes of self-harm, physical illness or misuse of drugs) often have odds ratios of less than 1.2. Aside from the cumbersome nature of actuarial tools (often requiring a computer), predicting which patients with these risk factors will survive and which will die by suicide remains largely a matter of Knightian uncertainty, and because of this actuarial tools remain largely the province of research rather than day-to-day clinical practice. (This distinction is important: I am not arguing against research. Uncertainty and risk are fluid, historical concepts and today's Knightian uncertainty may be tomorrow's manageable risk.)
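The way an odds ratio alters a prediction can be sketched in a few lines. The baseline of 2.9% and the three odds ratios come from the figures quoted above; the function names are hypothetical, and treating the cohort-wide rate as the baseline to which each odds ratio is applied is a simplifying assumption (strictly, an odds ratio compares people with a factor against those without it).

```python
def probability_to_odds(p: float) -> float:
    return p / (1.0 - p)


def odds_to_probability(odds: float) -> float:
    return odds / (1.0 + odds)


def apply_odds_ratio(baseline_probability: float, odds_ratio: float) -> float:
    """Scale the baseline odds by an odds ratio and convert back."""
    return odds_to_probability(
        probability_to_odds(baseline_probability) * odds_ratio
    )


BASELINE = 0.029  # proportion of the cohort who later died by suicide
ODDS_RATIOS = {
    "Use of alcohol": 1.14,
    "Widowed/divorced/separated": 1.51,
    "Age 55+": 1.79,
}

# Assumption: each factor is applied on its own to the cohort-wide baseline.
adjusted = {
    name: apply_odds_ratio(BASELINE, ratio)
    for name, ratio in ODDS_RATIOS.items()
}
for name, p in adjusted.items():
    print(f"{name}: {p:.1%} predicted to die by suicide, {1 - p:.1%} to survive")
```

Even the largest of the three odds ratios moves the prediction only from about 3% to about 5%, so the great majority of patients flagged by any one factor would still be expected to survive.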

Clinical assessment tools

Clinical risk assessments work more like expected utility or subjective expected probability (Fonseca, 2006). They are a measure of the rater's strength of belief in the presence or absence of a particular risk factor. Typically, a number of risk factors (themselves often generated by frequentist methods) are presented in a table and the rater enters high, medium or low in each cell before coming up with a synthesised overall risk rating at the end. Such risk assessments notoriously overestimate the degree of risk.

Myths surrounding risk assessment

Both actuarial and clinical assessment tools contribute to myths about risk assessment, of which perhaps the most damaging is that people in high-risk groups are much more likely than others to die by suicide. In the actuarial example above, we saw that survival in the group as a whole was around 97%, falling to just under 95% in people with the three most important risk factors.

A second myth about suicide is that focusing resources on high-risk groups reduces the number of suicides. This belief is wrong for two reasons. First, most (86% according to the National Confidential Inquiry into Suicide and Homicide by People with Mental Illness; Appleby et al, 2006) suicides occur in low-risk groups, for the simple reason that although these groups contain individuals at lower risk, they also contain many more members than high-risk groups. Around three-quarters of people who die by suicide have not been in touch with mental health services in the year before their death (Appleby et al, 2006): no change in risk management policy for existing patients will affect this group. Second, there is no evidence that addressing risk factors as such has any impact on survival. This is a rather counter-intuitive proposition that requires justification. Very few interventions in psychiatry have been shown decisively to reduce the incidence of suicide: clozapine in schizophrenia, lithium in bipolar disorder and perhaps partial hospitalisation programmes in borderline personality disorder. None of these interventions is given for the primary purpose of reducing risk; they are given as effective, targeted treatments for specifically diagnosed mental illnesses. This distinction is important because giving undue weight to risk assessments draws us away from what Power might call our ‘core business’: treating mentally ill people in an effective and ethically justifiable way.
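The first of these reasons, that most suicides occur in low-risk groups, is a consequence of group size and can be shown with simple arithmetic. The group sizes and rates below are hypothetical, chosen only to illustrate the effect; they are not taken from the National Confidential Inquiry, and the function name is an assumption.

```python
def share_of_events(groups: dict[str, tuple[int, float]]) -> dict[str, float]:
    """Return each group's share of all expected events.

    groups maps a label to (number of people, probability of the event).
    """
    expected = {name: size * rate for name, (size, rate) in groups.items()}
    total = sum(expected.values())
    return {name: count / total for name, count in expected.items()}


# Hypothetical figures, for illustration only.
groups = {
    "high risk": (1_000, 0.05),    # few people, higher individual risk
    "low risk": (100_000, 0.003),  # many people, lower individual risk
}
shares = share_of_events(groups)
for name, share in shares.items():
    print(f"{name}: {share:.0%} of expected events")
```

With these assumed numbers the low-risk group accounts for most of the expected events despite a much lower individual risk, simply because it is a hundred times larger.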

Secondary risk management

Following Power, I would suggest that the most important effect of clinical risk assessments is to increase the rater's anxiety and lead to what he calls secondary risk management by the rater: to a certain amount of gaming, self-protection and moral hazard to the organisation and the patient. Some of these consequences are quite clearly counter-therapeutic: for example, a patient may be detained because not detaining them produces intolerable anxiety in the staff involved in the assessment. Who in the field of mental health has not asked themselves ‘How would this decision play in court (or in the newspapers) if it went wrong?’ This is secondary risk management in action. It changes the way healthcare professionals approach patients’ risk, and very rarely in the patients’ favour. Similar consequences arise with other decisions: when to grant hospital leave, when to report a fragile family to social services because a child is at risk, when to breach confidentiality.

Although specific decisions are regularly influenced by secondary risk management, I would argue that it also has a corrosive effect on the relationship between the clinician and the patient. The clinician who is engaging in secondary risk management is often obliged to justify their decisions in terms of primary risk management. When a doctor constructs a patient as a source of threat to their professional legitimacy, they have stopped acting as a doctor to that patient. I suspect this is the source of the disquiet of the many clinicians I spoke to who feel uneasy about risk culture.

Inappropriate use of risk assessment tools that amplifies the anxiety of the assessor creates a further problem. If doctors fail to contain their own anxieties about patients adequately, it becomes far more difficult for professionals to reassure wider society about the true risks that patients pose. Doctors thereby reduce both their capacity and their inclination as a profession to resist wider social agendas that threaten to harm or restrict patients in the name of risk management.

Reducing the risks of risk assessment

Risk assessment tools are currently rather blunt instruments with the potential to harm patients, the organisations in which they are cared for, and the staff that work there. What can be done to attenuate this risk?

First, we need to recognise that (faulty though they are) risk assessments are here to stay: we do need to continue to use risk assessments where appropriate, and to continue to fund research that will permit better quantitative risk assessments – such as the National Confidential Inquiry project. Beyond this, though, is a need within organisations to apply what Power calls a second-order intelligence about when to use risk assessment tools. Where a knowledge base is less certain – the conditions of uncertainty rather than defined odds that apply in much of psychiatry – risk assessment is possible but problematic and may carry its own risk. This should be recognised by healthcare provider organisations.

Organisations should be explicit not just about the types of risk they are managing but also about the conceptual models they are using to manage it. This may protect against some of the dangers of inappropriate risk assessment.

Protecting the patient protects the staff

The best way to manage second-order reputational risks is to perform core activities to a high standard. This means that organisations should take steps to protect their staff from the necessity of second-order reputation management where this interferes with core tasks of patient care. In a patient-centred service, risk should primarily (but not exclusively) be seen in terms of the risk faced by the patient. A risk assessment should be a consensual process, with the patient and clinician striving towards a common language and conceptualisation of the risk, then deciding how best to manage it, not a stigmatising moral discourse.

Holding the patient's anxiety about his or her risk is one of the core tasks of mental health provider organisations that must be performed well. Psychiatric risks (chiefly violence to the self or other) are manifestations of suffering, and addressing the suffering is the primary way psychiatrists and other mental healthcare workers should address risk. This means the provision of a safe setting for trust and therapeutic closeness to the patient.

Anxiety containment

A key core task that is often impaired by risk assessment is management of institutional anxiety. Contagion of anxiety about risk in mental healthcare settings is currently at epidemic proportions. Containment of patient anxiety of necessity involves containment of staff anxiety. At the beginning of the 21st century, this should not really be news to psychiatry (Main, 1957). Detailed and complex risk assessments that amplify anxiety should be curtailed, perhaps in favour of models using bounded rationality heuristics (Box 2). Established technologies for managing staff anxiety (such as clinical supervision) should be prioritised as part of good clinical governance.

Box 2 Bounded rationality

Bounded rationality assumes that humans are not completely rational in their decision-making and that this can be adaptive. For example, it may have greater utility to make a decision on less information rather than expend time and energy on accumulating more information of marginal utility.

Risk assessments need to be seen as valuable but vulnerable professional decisions in the face of uncertainty. They will on occasion be wrong. But wrong in retrospect does not necessarily mean blameworthy. Shame and blame as a reaction to a risk assessment that is proved wrong further worsen the anxiety amplification that risk assessments create. The principles for investigating critical incidents are well known and the National Confidential Inquiry is in this respect a model organisation: its process is voluntary, confidential, non-punitive, objective and independent. Although these five characteristics are well known to be crucial in building mechanisms for organisations to learn from experience (Vicente, 2004), local incident-reporting procedures and public inquiries into critical incidents in the National Health Service rarely meet more than one or two of these criteria. Building these characteristics into incident reporting protects staff from some of the burden of reputation management.

MCQs

1 Pascal's wager:

a is a bet that God plays dice

b is a bet that God exists

c is a bet that you will always eventually lose in a game of roulette

d is an excuse not to go to church

e is a bet that God not only plays dice, He sometimes confuses us by throwing them where they can't be seen.

2 The propensity view of chance:

a is associated with the name of Frank Knight

b assumes that chance events are a property of the natural world

c assumes that chance is unmeasurable

d assumes that chance is unknowable

e assumes that an infinitely large number of observations need to be made before the true probability of an event can be stated.

3 The subjective expected probability of an event is:

a objective pieces of knowledge about the world

b always shared between different observers

c commonly used by bookmakers

d meaningless in conditions of Knightian uncertainty

e independent of the way that information about the event is presented.

4 Secondary risk management:

a is an actuarial method of risk management

b is driven by anxiety about risk management

c is an important way of reducing the number of suicides in the UK

d as a concept originated in the field of aviation

e always works in patients’ interests.

5 An important characteristic of a critical incident investigation is that it is:

a involuntary

b open

c punitive

d independent

e subjective.

MCQ answers

| 1 | | 2 | | 3 | | 4 | | 5 | |
|---|---|---|---|---|---|---|---|---|---|
| a | F | a | F | a | F | a | F | a | F |
| b | T | b | T | b | F | b | T | b | F |
| c | F | c | F | c | T | c | F | c | F |
| d | F | d | F | d | F | d | F | d | T |
| e | F | e | F | e | F | e | F | e | F |

Declaration of interest

None.
